
The world of large language models (LLMs) is expanding rapidly, but not everyone has access to the hardware that makes it all run smoothly. Traditionally, NVIDIA’s CUDA-based GPUs have been the go-to choice for developers and researchers looking to fine-tune or deploy LLMs. But that’s changing. AMD has stepped in with a solution that not only works but is now supported right out of the box—thanks to Hugging Face and the ROCm ecosystem.

That means no workarounds, no obscure fixes, and no starting from scratch. Just install and run. Let’s look at what this new AMD + Hugging Face pairing really means, how it works, and what you need to get started.

What makes AMD stand out in this arena? It's not merely price or hardware specifications. It's the open-source foundation of the ROCm platform (originally "Radeon Open Compute"). Unlike closed solutions, ROCm is built to be transparent and flexible, and it lets users run PyTorch-based models without rewriting their codebase or falling back on less-supported tooling.

Why does that matter? Because it makes LLM development accessible to a broader group of users, including those who have been shut out by cost or compatibility. The barrier to entry drops sharply once a well-supported alternative exists.

Up until now, if you had an AMD GPU and wanted to run something like a 7B or 13B parameter model, you’d face compatibility issues or poor performance. Now, with proper support from Hugging Face Transformers, AMD GPUs (like the MI250 or even consumer-level cards with ROCm support) can handle the same workflows that were once exclusive to NVIDIA.

The most straightforward way to get started is to use Hugging Face's transformers library with PyTorch. AMD support comes through ROCm builds of PyTorch, which run transformer models on AMD GPUs without custom kernels or backend rewrites.

Here’s a quick look at how the pieces fit together:

PyTorch with ROCm: AMD has worked closely with the PyTorch team to make sure ROCm is fully integrated. This includes support for FP16 and BF16 precision, which are standard in LLM inference and training.

Optimum Library: Hugging Face offers optimum, an optimization toolkit that includes AMD-specific implementations. This lets you load, quantize, and run models using ROCm without diving into low-level tuning.

Out-of-the-Box Models: Many popular models, like LLaMA, Falcon, and BLOOM, can now be deployed on AMD GPUs with little or no modification. Just load them from the Hugging Face Hub, and they'll run as expected, assuming your hardware is supported.

This isn't just about making something technically work—it's about delivering a smooth experience from installation to inference. And that's exactly what Hugging Face and AMD are now offering.

Let’s go through the actual steps to get everything running on an AMD GPU.

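Before anything else, confirm that your AMD GPU appears on the supported-hardware list for ROCm 6.0 or later in AMD's official compatibility matrix. That list includes data-center Instinct accelerators such as the MI250. Consumer GPUs, such as the RX 6700 XT, may work but are not officially supported for all workloads.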

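AMD provides pre-built packages for Ubuntu. Follow the official ROCm installation guide to register AMD's package repository for your Ubuntu release; once that is done, the condensed version is:

```bash
sudo apt update
# Kernel driver, then the ROCm user-space stack (assumes AMD's apt repository is already configured)
sudo apt install amdgpu-dkms
sudo apt install rocm
# Give your user access to the GPU device nodes
sudo usermod -a -G render,video $LOGNAME
```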

After installation, reboot your system and confirm that the drivers are loaded:

```bash
rocminfo
```

You should see your GPU listed, along with details like memory and compute units.
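If you want to script this check, the sketch below runs rocminfo and extracts the GPU architecture names it reports (gfx targets, for example gfx90a for the MI200 series), which you can then compare against AMD's support matrix. It assumes rocminfo prints GPU agents as lines of the form "Name: gfx...", as current releases do; the parsing is a heuristic of ours, not an official interface.

```python
import re
import subprocess

# Matches GPU agent lines such as "  Name:   gfx90a" in rocminfo output.
# CPU agents ("Name: AMD EPYC ...") and ISA lines ("Name: amdgcn-amd-amdhsa--gfx90a:...") do not match.
_GFX_NAME = re.compile(r"^\s*Name:\s+(gfx[0-9a-f]+)\s*$", re.MULTILINE)


def gpu_targets(rocminfo_output: str) -> list[str]:
    """Return the sorted, de-duplicated gfx targets listed in rocminfo output."""
    return sorted(set(_GFX_NAME.findall(rocminfo_output)))


def main() -> None:
    try:
        result = subprocess.run(["rocminfo"], capture_output=True, text=True, check=True)
    except FileNotFoundError:
        print("rocminfo not found: is ROCm installed and /opt/rocm/bin on your PATH?")
        return
    except subprocess.CalledProcessError as err:
        print(f"rocminfo failed (exit code {err.returncode}); check the driver and group permissions")
        return
    targets = gpu_targets(result.stdout)
    if targets:
        print("ROCm sees these GPU targets:", ", ".join(targets))
    else:
        print("rocminfo ran, but no GPU agents were listed")


if __name__ == "__main__":
    main()
```

A multi-die card such as the MI250 shows up as two agents with the same target, which is why the helper de-duplicates.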

It’s best to isolate your LLM work in a dedicated Python environment:

```bash
python -m venv amd-llm
source amd-llm/bin/activate
pip install --upgrade pip
```

First, install ROCm-compatible PyTorch:

```bash
pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/rocm6.0
```
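To confirm that pip actually installed the ROCm build rather than a CPU-only or CUDA wheel, you can inspect torch.version.hip, which holds a version string on ROCm builds and is None otherwise. This is a minimal sketch; classify_build is a hypothetical helper that exists only to keep the decision logic testable on a machine without a GPU.

```python
from typing import Optional


def classify_build(hip_version: Optional[str], cuda_version: Optional[str]) -> str:
    """Name the GPU backend a PyTorch build targets (hypothetical helper)."""
    if hip_version:
        return "rocm"
    if cuda_version:
        return "cuda"
    return "cpu"


def describe_torch() -> str:
    try:
        import torch
    except ImportError:
        return "PyTorch is not installed in this environment"
    # torch.version.hip is set on ROCm wheels; getattr guards against very old builds
    backend = classify_build(getattr(torch.version, "hip", None), torch.version.cuda)
    # On ROCm builds, torch.cuda.is_available() reports whether an AMD GPU is usable
    gpu = torch.cuda.is_available()
    return f"torch {torch.__version__}: {backend} build, GPU available: {gpu}"


if __name__ == "__main__":
    print(describe_torch())
```

A "rocm build, GPU available: False" result usually points at the driver or group permissions rather than at pip.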

Then install Hugging Face libraries:

```bash
pip install transformers accelerate optimum
```

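The optimum package is not required for basic inference: GPU acceleration itself comes from the ROCm build of PyTorch installed above. Optimum adds optimization tooling on top, such as ONNX export and quantization, without requiring changes to the core model code.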
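A quick way to confirm that these libraries landed in the active virtual environment is to query their installed versions with the standard library's importlib.metadata. The package names below are simply the ones installed in the previous steps.

```python
from importlib.metadata import PackageNotFoundError, version
from typing import Optional


def installed_versions(packages: list[str]) -> dict[str, Optional[str]]:
    """Map each distribution name to its installed version, or None if it is missing."""
    found: dict[str, Optional[str]] = {}
    for name in packages:
        try:
            found[name] = version(name)
        except PackageNotFoundError:
            found[name] = None
    return found


if __name__ == "__main__":
    for name, ver in installed_versions(["torch", "transformers", "accelerate", "optimum"]).items():
        print(f"{name:<13} {ver or 'NOT INSTALLED'}")
```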

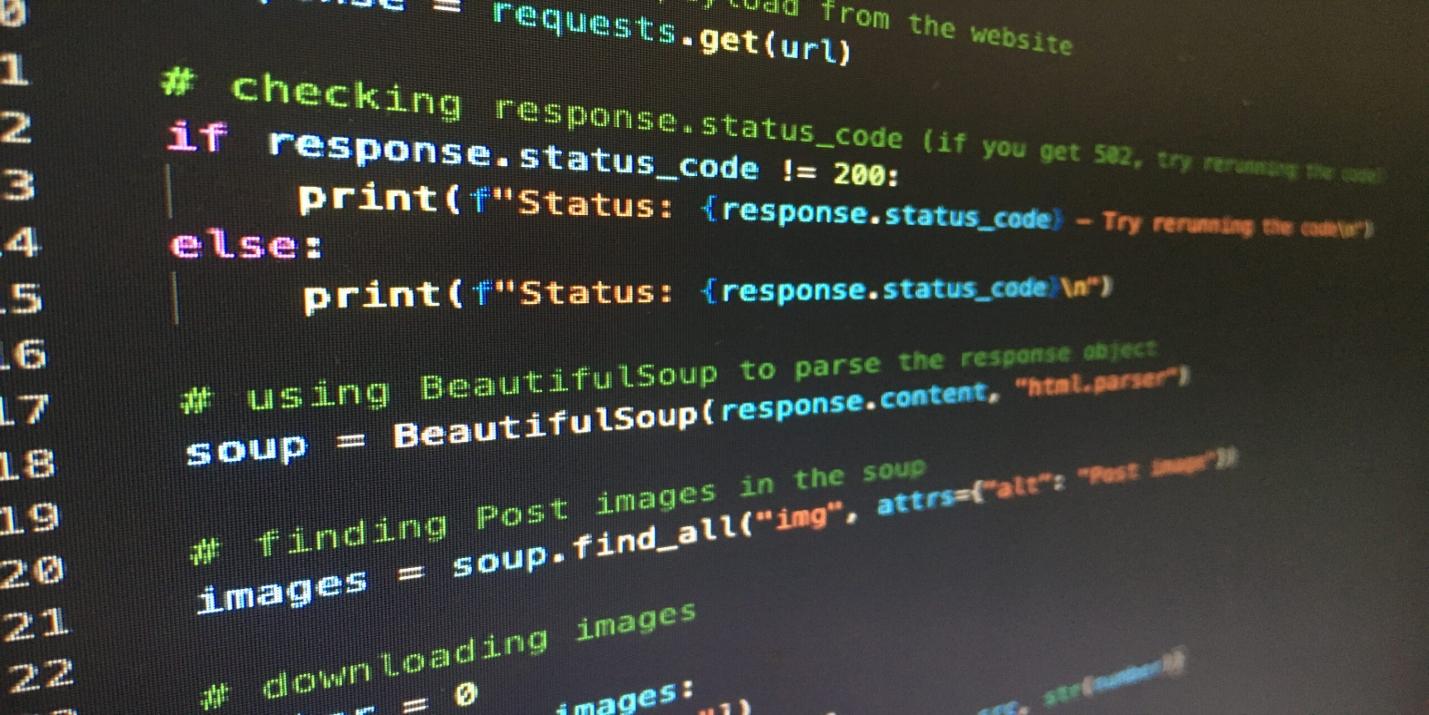

You can now use transformers just like you would on an NVIDIA GPU:

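```python
import torch
from transformers import AutoTokenizer, AutoModelForCausalLM

model_id = "tiiuae/falcon-7b"
tokenizer = AutoTokenizer.from_pretrained(model_id)
# Load weights directly in FP16 instead of loading FP32 and calling .half()
model = AutoModelForCausalLM.from_pretrained(model_id, torch_dtype=torch.float16).to("cuda")

prompt = "What's the weather like in Paris?"
# On ROCm builds of PyTorch, the "cuda" device refers to the AMD GPU
inputs = tokenizer(prompt, return_tensors="pt").to("cuda")

# max_new_tokens counts only generated tokens; max_length would also count the prompt
outputs = model.generate(**inputs, max_new_tokens=50)
print(tokenizer.decode(outputs[0], skip_special_tokens=True))
```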

Yes, .to("cuda") still works. ROCm builds of PyTorch expose AMD GPUs through the same torch.cuda interface (implemented on top of HIP), so code written for NVIDIA GPUs runs as expected.
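As a result, device-agnostic code written for NVIDIA GPUs carries over directly. The sketch below picks the GPU when PyTorch can see one and falls back to the CPU otherwise; the small matrix multiply is only a smoke test, not a benchmark, and the function names are illustrative.

```python
try:
    import torch
except ImportError:  # lets the script report cleanly when PyTorch is absent
    torch = None


def pick_device() -> str:
    """Return "cuda" when a GPU is visible (an AMD GPU on ROCm builds), else "cpu"."""
    if torch is not None and torch.cuda.is_available():
        return "cuda"
    return "cpu"


def smoke_test(device: str) -> tuple:
    """Multiply two small random matrices on the given device and return the result shape."""
    a = torch.randn(64, 32, device=device)
    b = torch.randn(32, 16, device=device)
    return tuple((a @ b).shape)


if __name__ == "__main__":
    device = pick_device()
    if torch is None:
        print("PyTorch is not installed")
    else:
        if device == "cuda":
            # On ROCm this reports the AMD GPU's name
            print("Using GPU:", torch.cuda.get_device_name(0))
        print("Result shape on", device, smoke_test(device))
```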

AMD GPUs now support mixed-precision (FP16 and BF16), model parallelism, and large batch sizes. This makes them viable for real-world deployments and training, not just experiments.
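Mixed precision on ROCm uses the same PyTorch APIs as on NVIDIA hardware. The sketch below prefers BF16 when torch.cuda.is_bf16_supported() reports support and otherwise falls back to FP16, then runs a matmul under torch.autocast. The BF16-first policy is our assumption for illustration; on the CPU it simply uses BF16, which CPU autocast supports.

```python
try:
    import torch
except ImportError:
    torch = None


def pick_autocast(device: str):
    """Return (device_type, dtype) for torch.autocast, preferring BF16 where supported."""
    if device == "cuda":
        dtype = torch.bfloat16 if torch.cuda.is_bf16_supported() else torch.float16
        return "cuda", dtype
    return "cpu", torch.bfloat16  # CPU autocast supports bfloat16


def autocast_matmul(device: str):
    """Run one matmul on FP32 inputs under autocast and return the output dtype."""
    device_type, dtype = pick_autocast(device)
    x = torch.randn(8, 8, device=device)  # inputs are created in FP32
    w = torch.randn(8, 8, device=device)
    with torch.autocast(device_type=device_type, dtype=dtype):
        y = x @ w  # autocast runs the matmul in the lower-precision dtype
    return y.dtype


if __name__ == "__main__" and torch is not None:
    dev = "cuda" if torch.cuda.is_available() else "cpu"
    print(dev, autocast_matmul(dev))
```

The model weights and inputs stay in FP32; autocast only lowers the precision of eligible operations inside the context.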

That said, not every model will run equally well on all hardware. ROCm is catching up fast, but CUDA still has broader ecosystem support. Even so, published benchmarks suggest that AMD GPUs perform competitively on models like Falcon, LLaMA, and BLOOM.

You’ll get better performance when using models that have been optimized with optimum, and even more so when paired with ONNX export or quantization techniques.
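Part of why quantization helps is simple arithmetic: weight memory scales with the number of bytes stored per parameter. The back-of-the-envelope sketch below estimates weight storage only, for the 7B and 13B model sizes mentioned earlier. It ignores activations, the KV cache, and quantization metadata such as scales, so real memory use is higher.

```python
# Bytes needed to store one parameter at each precision
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "bf16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gib(num_params: float, precision: str) -> float:
    """Approximate weight storage in GiB (weights only; no activations or KV cache)."""
    return num_params * BYTES_PER_PARAM[precision] / 2**30


if __name__ == "__main__":
    for size in (7e9, 13e9):
        row = ", ".join(
            f"{p}: {weight_memory_gib(size, p):.1f} GiB" for p in ("fp32", "fp16", "int8", "int4")
        )
        print(f"{size / 1e9:.0f}B params -> {row}")
```

For a 7B model this works out to roughly 13 GiB of weights in FP16 versus about 3.3 GiB at 4 bits.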

AMD GPUs paired with Hugging Face support bring LLM acceleration to a much wider group of users. No CUDA, no hacks, and no compromise on quality. If you’ve been locked out of high-end model development due to hardware limitations, this changes things. The setup is straightforward, the performance is there, and the flexibility is built-in. AMD isn't just catching up—it's making LLMs easier to access and run on your own terms.

This shift also encourages broader hardware diversity in machine learning workflows, which benefits the entire open-source ecosystem. Developers can now build, test, and deploy without being tied to a single vendor. As ROCm continues to mature, the gap between AMD and its competitors is shrinking fast.
