
Every AI model starts in a specific framework—PyTorch, TensorFlow, or something else. But what happens when you want to use that same model somewhere else, maybe in a different environment or language? That’s where things used to get messy. The ONNX model format changes that. It acts like a common passport for neural networks, letting them travel between platforms without breaking.

Whether you're working on cloud deployment, edge devices, or just trying to streamline collaboration between teams using different tools, ONNX makes the transition smoother. It's not a framework—it's a bridge designed to save time, reduce duplication, and give your model more freedom to go where it's needed.

ONNX works by providing a standard format to represent deep learning models. This format defines both the computational graph and the operations used in the model. In simple terms, it describes the structure (like the layers in a neural network) and the behavior (like how inputs are transformed into outputs) in a way that different platforms can understand.
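To make the idea of "a graph plus a set of operations" concrete, here is a toy sketch in plain Python. It is not the `onnx` package and not how ONNX is implemented; the `Node` class, the `OPS` table, and `run_graph` are hypothetical names invented for illustration. It only borrows a few ONNX operator names to show how a list of named nodes can describe both structure and behavior.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List

# Toy operator registry: operator name -> Python function.
# The names mirror ONNX operators, but this is an illustration, not the onnx API.
OPS: Dict[str, Callable[..., float]] = {
    "Add": lambda a, b: a + b,
    "Mul": lambda a, b: a * b,
    "Relu": lambda a: max(a, 0.0),
}


@dataclass
class Node:
    op_type: str       # which operation to apply
    inputs: List[str]  # names of the values the node consumes
    output: str        # name of the value the node produces


def run_graph(nodes: List[Node], feeds: Dict[str, float]) -> Dict[str, float]:
    """Evaluate nodes in order; nodes are assumed to be topologically sorted."""
    values = dict(feeds)
    for node in nodes:
        args = [values[name] for name in node.inputs]
        values[node.output] = OPS[node.op_type](*args)
    return values


# y = relu(x * w + b), written as three graph nodes
graph = [
    Node("Mul", ["x", "w"], "xw"),
    Node("Add", ["xw", "b"], "z"),
    Node("Relu", ["z"], "y"),
]
result = run_graph(graph, {"x": 2.0, "w": -3.0, "b": 1.0})
print(result["y"])  # 2 * -3 + 1 = -5, and relu(-5) = 0.0
```

Because the graph is just data (node types and value names), any program that knows the same operator definitions can evaluate it, which is the property a shared format relies on.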

An ONNX model is stored in a .onnx file, which is essentially a serialized protocol buffer (protobuf). This file includes the model architecture, trained weights, and metadata. When you convert a model from PyTorch, for example, you export it with the framework's built-in exporter (`torch.onnx.export`). Once exported, the file can be run by any runtime or framework that supports the ONNX format, such as ONNX Runtime.
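As a rough sketch of the export step, the helper below wraps PyTorch's `torch.onnx.export`. The function name `export_to_onnx`, the tensor names `"input"` and `"output"`, the file name in the usage comment, and the default opset version are assumptions chosen for illustration; the right values depend on your model and your installed PyTorch version.

```python
def export_to_onnx(model, example_input, path, opset_version=17):
    """Export a PyTorch module to an ONNX file and return the file path.

    Assumes a model with a single tensor input and a single tensor output.
    """
    import torch  # imported lazily so this helper can be defined without PyTorch installed

    model.eval()  # switch off training-only behavior such as dropout
    torch.onnx.export(
        model,
        (example_input,),          # example input used to record the graph
        path,
        input_names=["input"],     # assumed names, used later to feed the model
        output_names=["output"],
        # let the first dimension vary so the model accepts any batch size
        dynamic_axes={"input": {0: "batch"}, "output": {0: "batch"}},
        opset_version=opset_version,
    )
    return path


# Example usage (requires PyTorch):
#   import torch
#   model = torch.nn.Linear(4, 2)
#   export_to_onnx(model, torch.randn(1, 4), "linear.onnx")
```

The example input matters because the exporter records the operations the model performs on it; code paths that the example does not exercise may not appear in the exported graph.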

A major reason this works is that ONNX maintains a versioned, growing list of supported operations (known as the operator set or "opset"). These operations are defined in a framework-independent way, so as long as your model uses only operations available in the target opset version, conversion and execution usually go smoothly.
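One way to see which opset and operators a model depends on is to load the file with the `onnx` Python package and look at its `opset_import` and `graph.node` fields. The sketch below assumes `onnx` is installed; `summarize_ops` and `describe_model` are hypothetical helper names, not part of the library.

```python
from collections import Counter


def summarize_ops(nodes):
    """Count how often each operator type appears in a sequence of graph nodes."""
    return Counter(node.op_type for node in nodes)


def describe_model(path):
    """Return (opsets, operator counts) for the ONNX file at `path`."""
    import onnx  # imported lazily so summarize_ops works without onnx installed

    model = onnx.load(path)
    onnx.checker.check_model(model)  # raises if the model is structurally invalid
    # An empty domain string denotes the default ONNX operator domain.
    opsets = {entry.domain or "ai.onnx": entry.version for entry in model.opset_import}
    return opsets, summarize_ops(model.graph.node)


# Example usage (requires the onnx package and an exported model file):
#   opsets, op_counts = describe_model("linear.onnx")
#   print(opsets)
#   print(op_counts.most_common())
```

Comparing the operator counts against what your target runtime supports is a quick sanity check before deployment.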

There's also something called the ONNX Runtime. This is a high-performance engine developed by Microsoft, optimized for both training and inference. It supports hardware acceleration through backends such as CUDA for NVIDIA GPUs or DirectML on Windows. ONNX Runtime allows you to deploy models in production with less overhead while maintaining strong performance.
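A minimal inference sketch with the `onnxruntime` Python package might look like the following. ONNX Runtime calls its hardware backends "execution providers"; the preference order below and the helper names `choose_providers` and `run_model` are assumptions for illustration, and the code assumes a model with a single input.

```python
# Assumed priority: NVIDIA GPU first, then DirectML, then plain CPU.
PREFERRED = ("CUDAExecutionProvider", "DmlExecutionProvider", "CPUExecutionProvider")


def choose_providers(available, preferred=PREFERRED):
    """Keep the preferred providers that are actually available, in priority order."""
    chosen = [name for name in preferred if name in available]
    return chosen or ["CPUExecutionProvider"]  # fall back to CPU if nothing matched


def run_model(path, input_array):
    """Run one inference pass on a single-input ONNX model and return its outputs."""
    import onnxruntime as ort  # imported lazily; requires the onnxruntime package

    providers = choose_providers(ort.get_available_providers())
    session = ort.InferenceSession(path, providers=providers)
    input_name = session.get_inputs()[0].name
    # Passing None as the first argument requests all model outputs.
    return session.run(None, {input_name: input_array})


# Example usage (requires onnxruntime, numpy, and an exported model file):
#   import numpy as np
#   outputs = run_model("linear.onnx", np.random.rand(1, 4).astype(np.float32))
```

Listing the CPU provider last gives the session something to fall back on when an accelerator is present on one machine but missing on another.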

ONNX supports both traditional deep learning tasks, such as image classification, and more complex pipelines involving multiple models or custom preprocessing steps. For edge devices or platforms with limited resources, ONNX helps by reducing the need for large dependency stacks. Instead of bundling a complete deep-learning library, you can use a lightweight runtime.

One of the strongest cases for ONNX is its ability to make models framework-agnostic. This means you can train a model in PyTorch, export it to ONNX, and deploy it with ONNX Runtime, OpenCV's DNN module, or a C++ application without touching the original training code again. Targets that do not read ONNX directly, such as TensorFlow Lite, need a further conversion step. This flexibility helps teams use the best tools for each part of a project: training with one and deploying with another.

Speed is another benefit. ONNX Runtime often delivers faster inference than native framework runtimes in real-world use cases, though the size of the gain depends on the model, the hardware, and how the runtime is configured. The difference tends to be most noticeable when working with large models or deploying at scale.
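Since speedups vary from case to case, it is worth measuring on your own model and hardware. The sketch below is a small, generic timing harness using only the standard library; `benchmark` is a hypothetical helper, and the summation workload is a stand-in for a real inference call.

```python
import statistics
import time


def benchmark(run_once, warmup=5, repeats=50):
    """Time a zero-argument callable and return latency statistics in milliseconds."""
    for _ in range(warmup):
        run_once()  # warm-up calls are not timed (caches, lazy initialization)
    samples = []
    for _ in range(repeats):
        start = time.perf_counter()
        run_once()
        samples.append((time.perf_counter() - start) * 1000.0)
    return {
        "median_ms": statistics.median(samples),
        "mean_ms": statistics.fmean(samples),
        "max_ms": max(samples),
    }


# Stand-in workload; in practice pass something like
# lambda: session.run(None, feeds) for each runtime you want to compare.
stats = benchmark(lambda: sum(range(10_000)))
print(stats)
```

Running the same harness against the original framework and against the exported model, with identical inputs, gives a like-for-like comparison.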

Another key point is stability. Since ONNX defines the model in a static graph format, the behavior becomes more predictable. Dynamic frameworks like PyTorch work well during experimentation, but for deployment, a frozen computation graph like ONNX provides consistency and control.

ONNX also simplifies the model auditing and versioning process. Since the format is consistent and framework-independent, you can store and compare different versions of a model more reliably. This helps in industries where model tracking, reproducibility, and compliance matter.
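One simple way to track model versions, sketched here with only the standard library, is to record a cryptographic hash of each exported file. The helper names `file_fingerprint` and `same_model` are hypothetical. Note the limits of this approach: identical hashes mean byte-identical files, while different hashes do not necessarily mean different model behavior.

```python
import hashlib


def file_fingerprint(path, chunk_size=1 << 20):
    """Return the SHA-256 hex digest of a file, read in chunks to limit memory use."""
    digest = hashlib.sha256()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            digest.update(chunk)
    return digest.hexdigest()


def same_model(path_a, path_b):
    """True if the two files are byte-for-byte identical."""
    return file_fingerprint(path_a) == file_fingerprint(path_b)


# Example usage:
#   print(file_fingerprint("model_v1.onnx"))
#   print(same_model("model_v1.onnx", "model_v2.onnx"))
```

Storing the digest alongside the training configuration makes it possible to confirm later that the file in production is the one that was reviewed.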

Collaboration improves as well. Data scientists and engineers often use different tools. With ONNX as the bridge, you don’t need everyone on the team to work in the same framework. That reduces friction in development and speeds up project timelines.

There is growing support for ONNX in cloud environments as well. Platforms like Azure Machine Learning and AWS SageMaker offer built-in ONNX compatibility, which makes deploying at scale easier. As model monitoring and optimization tools catch up, ONNX's role in the production lifecycle will only grow.

Like any shared format, ONNX has limits. One challenge is that not every operation from every framework is supported. If you’re using highly custom layers or experimental features, converting to ONNX can throw errors. While the operator set keeps expanding, there’s a delay between new features being introduced in frameworks and being available in ONNX.

Another concern is debugging. Once a model is in ONNX format, it becomes harder to inspect, modify, or retrain without going back to the original framework. This means you have to be confident that your model is production-ready before exporting it.

Also, while ONNX Runtime performs well, it sometimes needs tuning to take full advantage of available hardware accelerators. Compared to native deployment tools like TensorFlow Lite or TensorRT, ONNX can require more manual configuration in certain edge cases.

That said, ONNX is steadily becoming more than just a converter tool. There's active work on supporting training directly through ONNX. That means the ONNX format could serve as the core model structure from end to end—from development through deployment and even retraining.

Community involvement has been strong. It's an open-source project backed by major players like Microsoft and Meta, which means updates are frequent and issues are taken seriously. New tools, such as Netron (a visualizer for ONNX models) and open-source exporters for additional frameworks, continue to expand the ecosystem.

As machine learning projects move toward reproducibility, transparency, and portability, ONNX offers a practical path forward. It’s not about being the best framework. It’s about letting frameworks speak the same language.

The ONNX model format has become a reliable way to make AI models portable, flexible, and easier to deploy across platforms. By offering a shared format, it removes friction between machine learning frameworks and simplifies collaboration between teams. With strong support from the AI community and ongoing development, ONNX continues to expand its capabilities while maintaining performance and consistency. Though it has some limitations, the practical advantages make it a smart choice for real-world applications. As the demand for cross-platform AI solutions grows, the Open Neural Network Exchange is positioned to play a central role in modern workflows.

How to use transformers in Python for efficient PDF summarization. Discover practical tools and methods to extract and summarize information from lengthy PDF files with ease

Explore how GPTBot, OpenAI’s official web crawler, is reshaping AI model training by collecting public web data with transparency and consent in mind

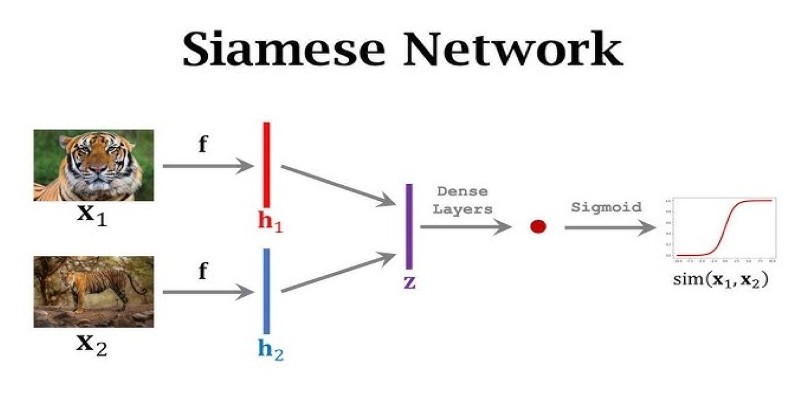

What makes Siamese networks so effective in comparison tasks? Dive into the mechanics, strengths, and real-world use cases that define this powerful neural network architecture

Explore the range of quantization schemes natively supported in Hugging Face Transformers and how they improve model speed and efficiency across backends like Optimum Intel, ONNX, and PyTorch

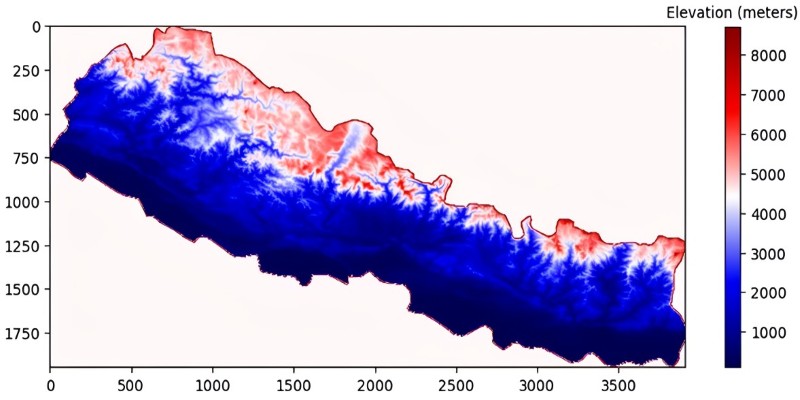

Discover the top ten tallest waterfalls in the world, each offering unique natural beauty and immense height

How Enterprise AI is transforming how large businesses operate by connecting data, systems, and people across departments for smarter decisions

How Rocket Money x Hugging Face are scaling volatile ML models in production with versioning, retraining, and Hugging Face's Inference API to manage real-world complexity

How AI in food service is transforming restaurant operations, from faster kitchens to personalized ordering and better inventory management

How Würstchen uses a compressed latent space to deliver fast diffusion for image generation, reducing compute while keeping quality high

How fine-tuning Llama 2 70B using PyTorch FSDP makes training large language models more efficient with fewer GPUs. A practical guide for working with massive models

How the ONNX model format simplifies AI model conversion, making it easier to move between frameworks and deploy across platforms with speed and consistency

Discover the top 8 most isolated places on Earth in 2024. Learn about these remote locations and their unique characteristics